Threat Intelligence Triage

Security teams face an impossible volume of alerts. Traditional tools apply rigid rules that either miss subtle threats or drown analysts in false positives. This solution shows how to build a triage system that learns from your team’s expertise—automatically improving its judgment over time.

The Problem

Every security team knows these failure modes:

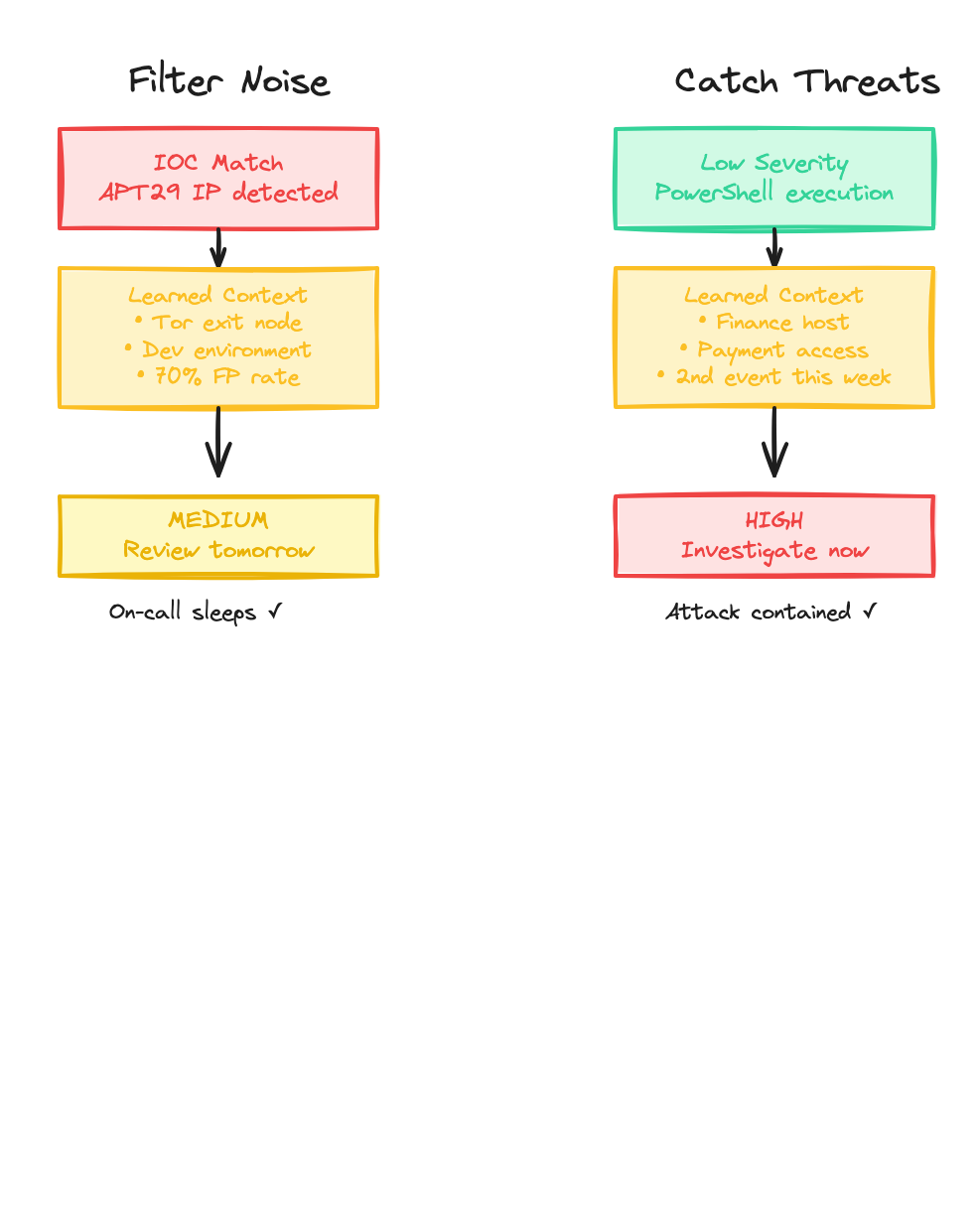

Alert fatigue: Your SIEM pages on-call at 3am for an IOC match. Two hours later, it’s confirmed as a Tor exit node hitting a dev server. Another false positive. Analysts start ignoring alerts.

Missed threats: A “low severity” PowerShell event sits at position #347 in the queue. Three days later, ransomware detonates. The subtle indicators were there—but buried in noise.

Traditional tools can’t solve this because they lack context and can’t learn. They treat every IOC match the same, regardless of what the target is, what the historical false positive rate is, or what your team has learned from past incidents.

How Intelligent Triage Works

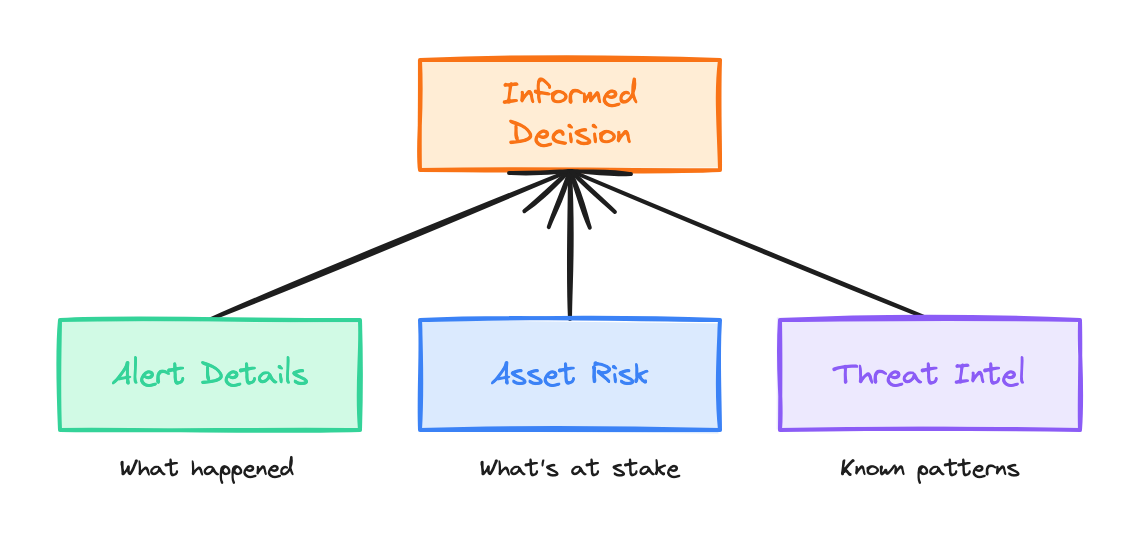

The system combines three things traditional SIEMs lack:

- Contextual awareness: the system knows not just that an alert fired, but which asset was targeted, how critical it is, who owns it, and what threat intelligence says about the indicators involved.

- Learned judgment: through analyst feedback, the system learns what your team considers noise versus real threats. It internalizes your risk tolerance, attribution standards, and escalation preferences.

- Continuous improvement: as analysts correct decisions, those corrections compound into systematic improvements. The system gets smarter over time instead of just accumulating more rules.

What the System Learns

The same system handles both directions (reducing noise AND catching subtle threats) because it has learned what your team actually cares about.

The Data Foundation

Intelligent triage requires three categories of data, stored as structured tables in a dataset.

Security Events

Your SIEM, EDR, or other detection systems feed events into an events table:

| event_id | timestamp | event_type | source_ip | dest_ip | dest_hostname | severity | raw_log |
|---|---|---|---|---|---|---|---|
| evt-001 | 2024-01-15T03:42:00Z | ssh_brute_force | 185.220.101.42 | 10.0.1.50 | dev-server-03 | HIGH | Failed SSH attempts… |
| evt-002 | 2024-01-15T09:15:00Z | powershell_anomaly | n/a | 10.0.2.100 | fin-admin-ws | LOW | Encoded PowerShell… |
| evt-003 | 2024-01-15T11:30:00Z | malware_callback | 10.0.3.25 | 91.234.56.78 | marketing-pc | CRITICAL | C2 beacon detected… |

Each row captures the raw detection with its original severity—before any contextual analysis.

Asset Inventory

Your CMDB or asset management system populates an assets table:

| hostname | ip_address | environment | criticality | data_classification | owner | business_unit |
|---|---|---|---|---|---|---|
| dev-server-03 | 10.0.1.50 | development | low | internal | Platform Team | Engineering |
| fin-admin-ws | 10.0.2.100 | production | critical | pii, financial | J. Martinez | Finance |
| marketing-pc | 10.0.3.25 | production | medium | internal | S. Chen | Marketing |

When an alert fires, the system joins against this table to understand what’s actually at stake. A “HIGH” severity alert hitting a dev server is very different from the same alert hitting a finance workstation.
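The join itself is a lookup. Below is a minimal sketch, assuming events and assets are loaded as Python dicts keyed by the column names in the tables above. The helper `join_asset` and the hostname-then-IP matching order are illustrative choices, not a documented API.

```python
from typing import Optional

# Sample rows mirroring the assets table.
ASSETS = [
    {"hostname": "dev-server-03", "ip_address": "10.0.1.50", "environment": "development",
     "criticality": "low", "data_classification": ["internal"],
     "owner": "Platform Team", "business_unit": "Engineering"},
    {"hostname": "fin-admin-ws", "ip_address": "10.0.2.100", "environment": "production",
     "criticality": "critical", "data_classification": ["pii", "financial"],
     "owner": "J. Martinez", "business_unit": "Finance"},
    {"hostname": "marketing-pc", "ip_address": "10.0.3.25", "environment": "production",
     "criticality": "medium", "data_classification": ["internal"],
     "owner": "S. Chen", "business_unit": "Marketing"},
]

# Build lookup indexes once so each join is a dictionary lookup.
BY_HOSTNAME = {a["hostname"]: a for a in ASSETS}
BY_IP = {a["ip_address"]: a for a in ASSETS}


def join_asset(event: dict) -> Optional[dict]:
    """Return the asset an event targets, or None if the CMDB doesn't know it.

    Matches on dest_hostname first and falls back to dest_ip (hypothetical order).
    """
    asset = BY_HOSTNAME.get(event.get("dest_hostname"))
    if asset is None:
        asset = BY_IP.get(event.get("dest_ip"))
    return asset


evt_001 = {"event_id": "evt-001", "dest_ip": "10.0.1.50",
           "dest_hostname": "dev-server-03", "severity": "HIGH"}
asset = join_asset(evt_001)
print(asset["environment"], asset["criticality"])  # development low
```

Matching on hostname first matters for outbound events such as evt-003. There, `dest_ip` is the external C2 address, but `dest_hostname` names the internal machine at risk. How to treat events whose target isn't in the CMDB (the `None` case) is a policy decision the pipeline still has to make.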

Threat Intelligence

Structured indicators populate an IOCs table:

| indicator | indicator_type | threat_actor | confidence | first_seen | tags |
|---|---|---|---|---|---|
| 185.220.101.42 | ipv4 | APT29 | medium | 2023-06-15 | tor_exit_node, cozy_bear |
| 91.234.56.78 | ipv4 | FIN7 | high | 2024-01-10 | carbanak, c2_server |
| encoded_ps_loader.ps1 | file_hash | Unknown | low | 2024-01-12 | powershell, obfuscated |
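Producing the `ioc_matches` input means looking up each event's indicators in this table. Below is a minimal sketch of exact-match lookup, assuming the table is loaded as a list of dicts. It only covers IP indicators against the events table's `source_ip` and `dest_ip` columns. `match_iocs` is a hypothetical helper; a production matcher would also handle domains, file hashes, and CIDR ranges.

```python
# Sample IP indicators mirroring the IOCs table.
IOCS = [
    {"indicator": "185.220.101.42", "indicator_type": "ipv4", "threat_actor": "APT29",
     "confidence": "medium", "first_seen": "2023-06-15", "tags": ["tor_exit_node", "cozy_bear"]},
    {"indicator": "91.234.56.78", "indicator_type": "ipv4", "threat_actor": "FIN7",
     "confidence": "high", "first_seen": "2024-01-10", "tags": ["carbanak", "c2_server"]},
]

# Index by indicator value; several feeds may list the same indicator.
IOC_INDEX: dict = {}
for ioc in IOCS:
    IOC_INDEX.setdefault(ioc["indicator"], []).append(ioc)


def match_iocs(event: dict) -> list:
    """Return every IOC whose value equals the event's source or destination IP."""
    matches = []
    for field in ("source_ip", "dest_ip"):
        value = event.get(field)
        if value:
            matches.extend(IOC_INDEX.get(value, []))
    return matches


evt_003 = {"event_id": "evt-003", "source_ip": "10.0.3.25", "dest_ip": "91.234.56.78"}
print([m["threat_actor"] for m in match_iocs(evt_003)])  # ['FIN7']
```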

Beyond structured IOCs, unstructured threat intelligence—reports, TTPs, incident post-mortems—goes into a knowledge base for semantic search. When the system sees an APT29 indicator, it can retrieve context about that actor’s typical targets, techniques, and historical false positive patterns.
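Semantic search normally ranks passages by embedding similarity. To keep this sketch dependency-free, it swaps in term-frequency cosine similarity, which only rewards shared words. A real knowledge base would use an embedding model and a vector index instead. The passages paraphrase intel quoted on this page, and the function names are illustrative.

```python
import math
import re
from collections import Counter

# Hypothetical knowledge-base passages.
PASSAGES = [
    "APT29 (Cozy Bear) typically targets government and diplomatic entities.",
    "IP 185.220.101.42 is a known Tor exit node used by multiple actors.",
    "FIN7 indicator 91.234.56.78 is tagged carbanak and c2_server.",
]

# Words, keeping dotted tokens such as IP addresses intact.
TOKEN = re.compile(r"[a-z0-9]+(?:\.[a-z0-9]+)*")


def vectorize(text: str) -> Counter:
    """Term-frequency vector: a crude stand-in for an embedding."""
    return Counter(TOKEN.findall(text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity of two sparse term-count vectors."""
    dot = sum(count * b[term] for term, count in a.items())  # Counter returns 0 for missing terms
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, k: int = 2) -> list:
    """Return the k passages most similar to the query."""
    q = vectorize(query)
    return sorted(PASSAGES, key=lambda p: cosine(q, vectorize(p)), reverse=True)[:k]


for passage in retrieve("APT29 185.220.101.42 tor_exit_node"):
    print(passage)
```

For the evt-001 indicators, the Tor exit node passage ranks first and the APT29 profile second. That is the combination that lets the triage step discount the attribution.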

The Triage Pipeline

The pipeline takes each security event and produces a structured triage decision. Here’s a simplified configuration:

```yaml
name: security-event-triage
handler: language_model

inputs:
  event:           # The raw security event
  asset:           # Joined asset context
  ioc_matches:     # Any matching threat intel
  threat_context:  # Retrieved from knowledge base

outputs:
  adjusted_severity: enum [CRITICAL, HIGH, MEDIUM, LOW, INFORMATIONAL]
  confidence: number (0-100)
  reasoning: string
  recommended_action: enum [page_oncall, investigate_urgent, investigate_normal, log_only]
  threat_assessment:
    likely_threat_actor: string | null
    attribution_confidence: enum [high, medium, low, none]
    attack_stage: enum [reconnaissance, initial_access, execution, persistence, lateral_movement, exfiltration, unknown]
```

The system prompt instructs the model to weigh factors like asset criticality, IOC confidence, historical patterns, and threat actor TTPs, and then to produce a structured decision.
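The output schema is what makes each decision machine-checkable. Below is a minimal sketch of validating a model response against it in Python. It assumes the response has already been parsed from JSON into a dict. The class names are hypothetical, not part of any platform API.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Severity(str, Enum):
    CRITICAL = "CRITICAL"
    HIGH = "HIGH"
    MEDIUM = "MEDIUM"
    LOW = "LOW"
    INFORMATIONAL = "INFORMATIONAL"


class Action(str, Enum):
    PAGE_ONCALL = "page_oncall"
    INVESTIGATE_URGENT = "investigate_urgent"
    INVESTIGATE_NORMAL = "investigate_normal"
    LOG_ONLY = "log_only"


class Attribution(str, Enum):
    HIGH = "high"
    MEDIUM = "medium"
    LOW = "low"
    NONE = "none"


class AttackStage(str, Enum):
    RECONNAISSANCE = "reconnaissance"
    INITIAL_ACCESS = "initial_access"
    EXECUTION = "execution"
    PERSISTENCE = "persistence"
    LATERAL_MOVEMENT = "lateral_movement"
    EXFILTRATION = "exfiltration"
    UNKNOWN = "unknown"


@dataclass(frozen=True)
class TriageDecision:
    adjusted_severity: Severity
    confidence: float
    reasoning: str
    recommended_action: Action
    likely_threat_actor: Optional[str]
    attribution_confidence: Attribution
    attack_stage: AttackStage

    @classmethod
    def from_dict(cls, d: dict) -> "TriageDecision":
        """Validate a parsed response; raises KeyError or ValueError on violations."""
        confidence = d["confidence"]
        if not isinstance(confidence, (int, float)) or not 0 <= confidence <= 100:
            raise ValueError(f"confidence must be 0-100, got {confidence!r}")
        ta = d["threat_assessment"]
        return cls(
            adjusted_severity=Severity(d["adjusted_severity"]),  # Enum lookup rejects unknown values
            confidence=confidence,
            reasoning=str(d["reasoning"]),
            recommended_action=Action(d["recommended_action"]),
            likely_threat_actor=ta["likely_threat_actor"],
            attribution_confidence=Attribution(ta["attribution_confidence"]),
            attack_stage=AttackStage(ta["attack_stage"]),
        )


sample = {
    "adjusted_severity": "MEDIUM",
    "confidence": 85,
    "reasoning": "Downgraded from HIGH to MEDIUM. Tor exit node; low-criticality dev server.",
    "recommended_action": "investigate_normal",
    "threat_assessment": {"likely_threat_actor": None,
                          "attribution_confidence": "none",
                          "attack_stage": "reconnaissance"},
}
decision = TriageDecision.from_dict(sample)
print(decision.adjusted_severity.value, decision.recommended_action.value)  # MEDIUM investigate_normal
```

Rejecting malformed responses at this boundary keeps a bad generation from silently routing an alert to the wrong queue.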

Walkthrough: Event evt-001

Let’s trace how the SSH brute force event flows through the pipeline:

1. Event arrives:

```yaml
event_id: evt-001
event_type: ssh_brute_force
source_ip: 185.220.101.42
dest_hostname: dev-server-03
original_severity: HIGH
```

2. Asset context joined:

```yaml
environment: development
criticality: low
data_classification: internal
```

3. IOC matches found:

```yaml
indicator: 185.220.101.42
threat_actor: APT29
confidence: medium
tags: [tor_exit_node, cozy_bear]
```

4. Knowledge base retrieval:

“APT29 (Cozy Bear) typically targets government and diplomatic entities. IP 185.220.101.42 is a known Tor exit node used by multiple actors. Attribution to APT29 based solely on this IP should be considered low confidence…”

5. Triage decision:

```yaml
adjusted_severity: MEDIUM
confidence: 85
reasoning: |
  Downgraded from HIGH to MEDIUM. While the source IP matches APT29 threat intel, it's a known Tor exit node (attribution unreliable). Target is a development server with low criticality and no sensitive data. SSH brute force against dev infrastructure is common noise.
recommended_action: investigate_normal
threat_assessment:
  likely_threat_actor: null  # Can't attribute via Tor
  attribution_confidence: none
  attack_stage: reconnaissance
```

The analyst reviews this in the morning, confirms the reasoning, and moves on within 10 minutes instead of being paged at 3am.
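Whether evt-001 becomes a morning review or a 3am page depends on what happens downstream of `recommended_action`. The source doesn't specify that routing, so here is a hypothetical sketch. `page_oncall` pages immediately and `log_only` is neither paged nor queued. Everything else goes into a review queue ordered by action urgency, then by confidence. The evt-002 and evt-003 decisions are invented for illustration.

```python
import heapq
from dataclasses import dataclass, field

# Lower number = reviewed sooner (an assumed ordering).
ACTION_PRIORITY = {"investigate_urgent": 0, "investigate_normal": 1}


@dataclass(order=True)
class QueuedEvent:
    priority: int
    neg_confidence: float                  # negated so higher confidence sorts first
    event_id: str = field(compare=False)   # excluded from ordering


def route(decisions):
    """Split triage decisions into immediate pages and an ordered review queue."""
    pages, heap = [], []
    for d in decisions:
        action = d["recommended_action"]
        if action == "page_oncall":
            pages.append(d["event_id"])
        elif action in ACTION_PRIORITY:
            heapq.heappush(heap, QueuedEvent(ACTION_PRIORITY[action], -d["confidence"], d["event_id"]))
        # log_only (or any unrecognized action) falls through: not paged, not queued
    review_queue = [heapq.heappop(heap).event_id for _ in range(len(heap))]
    return pages, review_queue


decisions = [
    {"event_id": "evt-001", "recommended_action": "investigate_normal", "confidence": 85},
    {"event_id": "evt-002", "recommended_action": "investigate_urgent", "confidence": 70},  # hypothetical
    {"event_id": "evt-003", "recommended_action": "page_oncall", "confidence": 90},         # hypothetical
]
pages, queue = route(decisions)
print(pages, queue)  # ['evt-003'] ['evt-002', 'evt-001']
```

Ordering the queue by the adjusted decision, rather than by arrival time or raw severity, is what keeps a subtle event like evt-002 from sitting at position #347.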

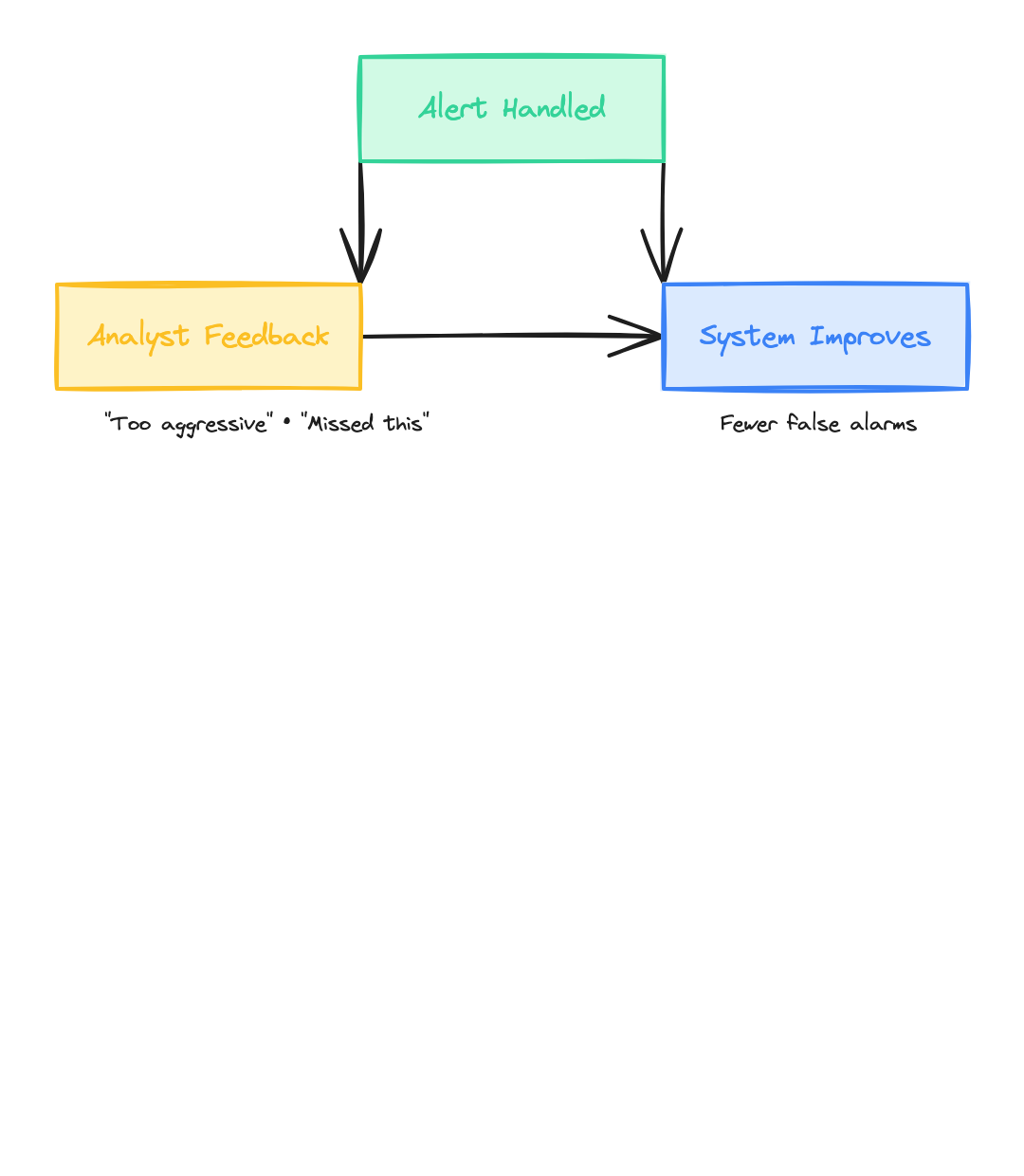

The Improvement Loop

The real power isn’t in the initial setup; it’s in how the system improves over time.

Signals: Capturing Expert Corrections

When an analyst reviews a triage decision, their rating and comment become a signal, whether the feedback confirms a good call or corrects a wrong or nearly-right one:

| signal_id | execution_id | rating | comment |
|---|---|---|---|
| sig-001 | exec-442 | 👎 | “Over-attributed to APT29. This is a Tor exit node; could be anyone. Need more indicators before naming threat actors.” |
| sig-002 | exec-443 | 👍 | “Good catch on the finance workstation. This pattern is exactly what we saw in last month’s incident.” |
| sig-003 | exec-445 | 👎 | “Missed that this host had two anomalies this week. Should have escalated.” |

The best signals include context about why the decision was off, not just that it was. “Too aggressive” is useful. “Too aggressive because this IP is a known Tor exit node, so we can’t attribute to APT29 without additional indicators” is much more valuable—the system can learn from the reasoning.
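Because reasoning-rich comments are worth more, a feedback workflow could nudge analysts when a thumbs-down arrives with too little explanation. Below is a minimal sketch of such a check. The `Signal` shape mirrors the table, but the word-count threshold is a crude, hypothetical heuristic, and sig-004 is an invented terse signal.

```python
from dataclasses import dataclass

# Hypothetical threshold: comments shorter than this rarely explain *why*.
MIN_COMMENT_WORDS = 8


@dataclass
class Signal:
    signal_id: str
    execution_id: str
    rating: str   # "up" or "down", standing in for the table's 👍 / 👎
    comment: str


def needs_more_context(sig: Signal) -> bool:
    """Flag a thumbs-down whose comment is too short to carry reasoning."""
    return sig.rating == "down" and len(sig.comment.split()) < MIN_COMMENT_WORDS


signals = [
    Signal("sig-002", "exec-443", "up",
           "Good catch on the finance workstation. This pattern is exactly what we saw in last month's incident."),
    Signal("sig-003", "exec-445", "down",
           "Missed that this host had two anomalies this week. Should have escalated."),
    Signal("sig-004", "exec-446", "down", "Too aggressive"),  # hypothetical terse signal
]

for s in signals:
    if needs_more_context(s):
        print(f"{s.signal_id}: ask the analyst why")  # sig-004: ask the analyst why
```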

Examples: Encoding Expert Judgment

While signals capture corrections, examples capture complete expert reasoning. When you promote a good triage decision to an example, you’re saying: “This decision (the inputs, the reasoning, the output) represents how our team wants to handle this type of situation.”

Example: Tor Exit Node Downgrade

```yaml
# Input context
event_type: ssh_brute_force
source_ip: 185.220.101.42
ioc_match: APT29 (medium confidence)
ioc_tags: [tor_exit_node]
asset_criticality: low
asset_environment: development

# Expected output
adjusted_severity: MEDIUM
attribution_confidence: none
reasoning: "Tor exit node - cannot reliably attribute. Dev server - low impact."
recommended_action: investigate_normal
```

Example: Critical Asset Escalation

```yaml
# Input context
event_type: powershell_anomaly
original_severity: LOW
asset_criticality: critical
asset_data_classification: [pii, financial]
recent_anomalies_on_host: 2

# Expected output
adjusted_severity: HIGH
reasoning: "Low-severity event but critical asset with recent anomalies. Escalate."
recommended_action: investigate_urgent
```

Build examples that cover your key scenarios: high-confidence attributions, false positive patterns, edge cases where context changes everything, and situations requiring specific response actions.
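Beyond evaluation and learning, the source doesn't say how examples feed back into the pipeline. One common approach, assumed here, is to include them in the prompt as few-shot demonstrations. Below is a minimal sketch that renders the two examples as prompt text; `render_few_shot` and the heading format are hypothetical.

```python
import json

# The two examples from this section, as input/expected-output pairs.
EXAMPLES = [
    {
        "name": "Tor Exit Node Downgrade",
        "input": {"event_type": "ssh_brute_force", "source_ip": "185.220.101.42",
                  "ioc_match": "APT29 (medium confidence)", "ioc_tags": ["tor_exit_node"],
                  "asset_criticality": "low", "asset_environment": "development"},
        "output": {"adjusted_severity": "MEDIUM", "attribution_confidence": "none",
                   "reasoning": "Tor exit node - cannot reliably attribute. Dev server - low impact.",
                   "recommended_action": "investigate_normal"},
    },
    {
        "name": "Critical Asset Escalation",
        "input": {"event_type": "powershell_anomaly", "original_severity": "LOW",
                  "asset_criticality": "critical",
                  "asset_data_classification": ["pii", "financial"],
                  "recent_anomalies_on_host": 2},
        "output": {"adjusted_severity": "HIGH",
                   "reasoning": "Low-severity event but critical asset with recent anomalies. Escalate.",
                   "recommended_action": "investigate_urgent"},
    },
]


def render_few_shot(examples: list) -> str:
    """Format examples as labeled input/output pairs for inclusion in a prompt."""
    blocks = []
    for ex in examples:
        blocks.append(
            f"### Example: {ex['name']}\n"
            f"Input:\n{json.dumps(ex['input'], indent=2)}\n"
            f"Expected output:\n{json.dumps(ex['output'], indent=2)}"
        )
    return "\n\n".join(blocks)


print(render_few_shot(EXAMPLES))
```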

Synthesis: Turning Patterns into Improvements

Rather than having someone read through dozens of analyst comments by hand, synthesis analyzes patterns across signals and proposes specific changes:

Synthesis Run #47: analyzed 23 signals from the past 2 weeks

| Pattern Found | Proposed Change |
|---|---|
| 8 signals flagged over-attribution to threat actors when source is Tor exit node | Add instruction: “When source IP is tagged as tor_exit_node, set attribution_confidence to ‘none’ regardless of threat actor matches.” |
| 5 signals noted missed escalations when host had multiple recent anomalies | Add instruction: “When target host has 2+ anomalous events in trailing 7 days, increase severity by one level.” |
| 3 signals praised good handling of after-hours finance alerts | Promote execution exec-892 to example set (captures correct escalation pattern) |

You review the proposed changes and decide whether to apply them. Each applied change updates the pipeline’s behavior—no code changes required.
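Both proposed instructions are precise enough to express as code. The source applies them as prompt instructions. This sketch shows the same rules deterministically, which could serve, for example, to compute the `recent_anomalies_on_host` input or to spot-check model outputs (both hypothetical uses). The anomaly history is invented. The rule doesn't say whether the current event counts toward "2+", so this sketch excludes it.

```python
from datetime import datetime, timedelta

SEVERITY_ORDER = ["INFORMATIONAL", "LOW", "MEDIUM", "HIGH", "CRITICAL"]


def parse_ts(ts: str) -> datetime:
    """Parse '2024-01-15T09:15:00Z' (fromisoformat rejects 'Z' before Python 3.11)."""
    return datetime.fromisoformat(ts.replace("Z", "+00:00"))


def apply_tor_rule(attribution_confidence: str, ioc_tags: list) -> str:
    """Proposed rule 1: Tor exit nodes get no attribution, whatever the IOC says."""
    return "none" if "tor_exit_node" in ioc_tags else attribution_confidence


def prior_anomalies(events: list, hostname: str, now: datetime, days: int = 7) -> int:
    """Count this host's events in the trailing window, excluding the current one."""
    cutoff = now - timedelta(days=days)
    return sum(
        1 for e in events
        if e["dest_hostname"] == hostname and cutoff <= parse_ts(e["timestamp"]) < now
    )


def apply_anomaly_rule(severity: str, anomaly_count: int, threshold: int = 2) -> str:
    """Proposed rule 2: 2+ recent anomalies raise severity one level, capped at CRITICAL."""
    if anomaly_count < threshold:
        return severity
    i = SEVERITY_ORDER.index(severity)
    return SEVERITY_ORDER[min(i + 1, len(SEVERITY_ORDER) - 1)]


# Hypothetical anomaly history for fin-admin-ws.
history = [
    {"dest_hostname": "fin-admin-ws", "timestamp": "2024-01-01T12:00:00Z"},  # outside window
    {"dest_hostname": "fin-admin-ws", "timestamp": "2024-01-10T22:05:00Z"},
    {"dest_hostname": "fin-admin-ws", "timestamp": "2024-01-13T02:40:00Z"},
]
now = parse_ts("2024-01-15T09:15:00Z")  # evt-002's timestamp
count = prior_anomalies(history, "fin-admin-ws", now)
print(count, apply_anomaly_rule("LOW", count))      # 2 MEDIUM
print(apply_tor_rule("medium", ["tor_exit_node"]))  # none
```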

The Compounding Effect

Over weeks and months, the system internalizes your team’s particular approach to:

- Risk calibration — What severity levels trigger which responses

- Attribution standards — When to name actors vs. hedge with “possible” or “indicators consistent with”

- Escalation judgment — Which combinations of factors warrant waking someone up at 3am

- Response priorities — What actions matter most for your environment

The system doesn’t just get more accurate—it gets more aligned with how your team thinks about security.

Measuring Success

How do you know the system is improving? Track these metrics:

| Metric | What It Measures | Target |
|---|---|---|
| False positive rate | Alerts escalated that turned out to be noise | Decreasing over time |
| Mean time to triage | How long events sit before getting reviewed | Decreasing for high-priority |
| After-hours pages | 3am wake-ups for non-critical issues | Decreasing |
| Missed threat rate | Real incidents that weren’t escalated | Zero or near-zero |
| Analyst corrections | Signals indicating wrong decisions | Decreasing over time |
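Once analysts record a final verdict on each alert, most of these metrics reduce to simple ratios. Below is a minimal sketch over hypothetical reviewed-alert records. The field names, the definition of "escalated", and the 08:00 to 18:00 business-hours window are all assumptions.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import mean


@dataclass
class ReviewedAlert:
    escalated: bool       # pipeline chose page_oncall or investigate_urgent (assumed definition)
    real_threat: bool     # analyst's final verdict
    paged: bool
    created_at: datetime
    reviewed_at: datetime


def false_positive_rate(alerts):
    """Share of escalated alerts that turned out to be noise."""
    esc = [a for a in alerts if a.escalated]
    return sum(not a.real_threat for a in esc) / len(esc) if esc else 0.0


def missed_threat_rate(alerts):
    """Share of real threats the pipeline did not escalate."""
    threats = [a for a in alerts if a.real_threat]
    return sum(not a.escalated for a in threats) / len(threats) if threats else 0.0


def after_hours_noise_pages(alerts, start_hour=8, end_hour=18):
    """Pages outside business hours for alerts that were noise."""
    return sum(
        1 for a in alerts
        if a.paged and not a.real_threat and not start_hour <= a.created_at.hour < end_hour
    )


def mean_minutes_to_triage(alerts):
    """Average creation-to-review delay for escalated (high-priority) alerts."""
    esc = [a for a in alerts if a.escalated]
    return mean((a.reviewed_at - a.created_at).total_seconds() / 60 for a in esc) if esc else 0.0


# Hypothetical reviewed alerts.
alerts = [
    ReviewedAlert(True, False, True, datetime(2024, 1, 15, 3, 0), datetime(2024, 1, 15, 3, 30)),
    ReviewedAlert(True, True, False, datetime(2024, 1, 15, 10, 0), datetime(2024, 1, 15, 10, 20)),
    ReviewedAlert(False, True, False, datetime(2024, 1, 15, 11, 0), datetime(2024, 1, 16, 9, 0)),
    ReviewedAlert(False, False, False, datetime(2024, 1, 15, 14, 0), datetime(2024, 1, 15, 16, 0)),
]
print(false_positive_rate(alerts), missed_threat_rate(alerts))        # 0.5 0.5
print(after_hours_noise_pages(alerts), mean_minutes_to_triage(alerts))  # 1 25.0
```

Tracking these per week, rather than as all-time totals, makes the "decreasing over time" targets visible.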

Run regular evaluations against your example set to catch regressions and track alignment improvements.
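An evaluation run can replay each example's input through the pipeline and compare the structured fields with the expected output. Below is a minimal sketch of that harness. It leaves out the free-text `reasoning` field, which needs human or model grading. `stub_pipeline` is a deliberately imperfect stand-in for the real LLM pipeline, and the baseline scores are hypothetical.

```python
# The two examples from this page, trimmed to the fields the stub reads.
EXAMPLES = [
    {"name": "Tor exit node downgrade",
     "input": {"event_type": "ssh_brute_force", "ioc_tags": ["tor_exit_node"],
               "asset_criticality": "low"},
     "output": {"adjusted_severity": "MEDIUM", "recommended_action": "investigate_normal"}},
    {"name": "Critical asset escalation",
     "input": {"event_type": "powershell_anomaly", "asset_criticality": "critical",
               "recent_anomalies_on_host": 2},
     "output": {"adjusted_severity": "HIGH", "recommended_action": "investigate_urgent"}},
]

FIELDS = ("adjusted_severity", "recommended_action")


def evaluate(examples, triage_fn):
    """Return per-field agreement with expected outputs and the failing example names."""
    hits = {f: 0 for f in FIELDS}
    totals = {f: 0 for f in FIELDS}
    failures = []
    for ex in examples:
        got = triage_fn(ex["input"])
        failed = False
        for f in FIELDS:
            if f not in ex["output"]:
                continue  # this example doesn't constrain the field
            totals[f] += 1
            if got.get(f) == ex["output"][f]:
                hits[f] += 1
            else:
                failed = True
        if failed:
            failures.append(ex["name"])
    scores = {f: hits[f] / totals[f] for f in FIELDS if totals[f]}
    return scores, failures


def stub_pipeline(inp):
    """Stand-in for the real pipeline so the harness runs."""
    if inp.get("asset_criticality") == "critical":
        return {"adjusted_severity": "HIGH", "recommended_action": "investigate_urgent"}
    return {"adjusted_severity": "MEDIUM", "recommended_action": "investigate_urgent"}


baseline = {"adjusted_severity": 1.0, "recommended_action": 1.0}  # hypothetical prior run
scores, failures = evaluate(EXAMPLES, stub_pipeline)
regressions = [f for f, s in scores.items() if s < baseline.get(f, 0.0)]
print(scores)       # {'adjusted_severity': 1.0, 'recommended_action': 0.5}
print(failures)     # ['Tor exit node downgrade']
print(regressions)  # ['recommended_action']
```

Comparing against the previous run's scores, rather than a fixed threshold, is what turns the example set into a regression check after each applied synthesis change.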

Implementation

Building this system requires:

- Data integration — Connect your SIEM events, asset inventory, and threat intelligence feeds

- Knowledge base setup — Index your threat reports and IOC data for semantic search

- Pipeline configuration — Define the triage logic and output schema

- Feedback integration — Build workflows for analysts to provide signals

- Synthesis cycles — Establish a cadence for reviewing and applying improvements

Component Summary

| Component | Role in Threat Triage |
|---|---|
| Dataset | Contains structured security data (events, IOCs, assets) |
| Tables | Schema-defined storage for events, indicators, and inventory |
| Files | Threat reports, runbooks, CVE bulletins |
| Knowledge Base | Semantic search over threat intel for contextual retrieval |
| Pipeline | Makes triage/notification decisions using LLM judgment |
| Signals | Analyst feedback on triage decisions |
| Examples | Ground truth decisions for evaluation and learning |
| Evaluations | Measure triage accuracy against expert examples |
| Synthesis | Analyze feedback patterns and propose improvements |