Evaluation Workflow

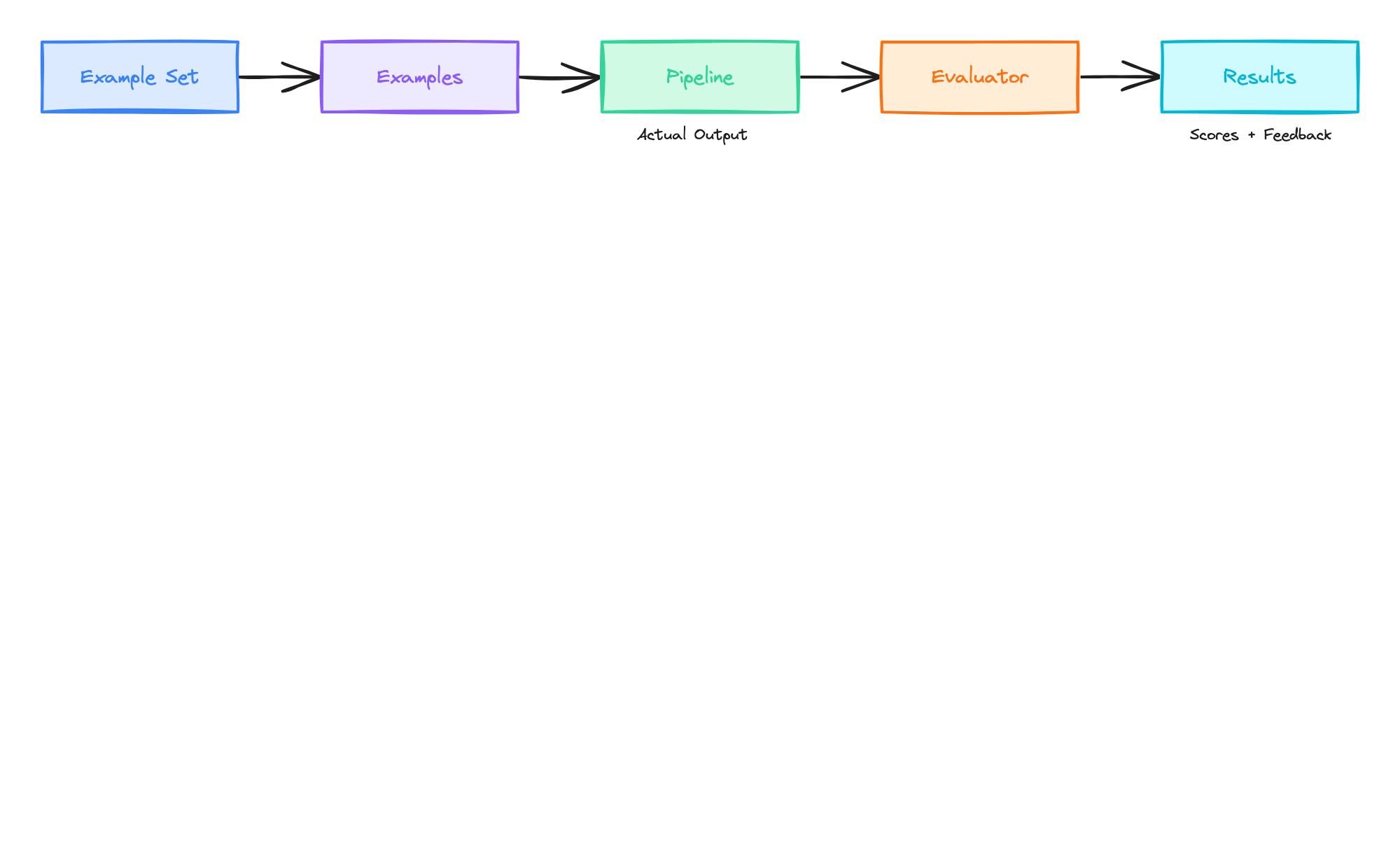

This guide walks through the complete workflow for measuring and improving pipeline quality: creating ground truth data, running evaluations, and interpreting results.

Overview

The evaluation workflow consists of four steps:

- Create an example set - Define the schema for your ground truth data

- Add examples - Populate with input/output pairs

- Run an evaluation - Compare pipeline outputs against expected outputs

- Analyze results - Identify areas for improvement
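Each step maps to one or two REST calls against `https://api.catalyzed.ai`, and every call in this guide authenticates with a bearer token. For quick reference, this minimal Python sketch collects the endpoints used later in the guide. `ENDPOINTS` and `endpoint_url` are illustrative names and are not part of any Catalyzed SDK.

```python
from urllib.parse import quote

BASE_URL = "https://api.catalyzed.ai"

# (HTTP method, path template) for each call made in this guide
ENDPOINTS = {
    "create_example_set": ("POST", "/example-sets"),                     # Step 1
    "add_example": ("POST", "/example-sets/{example_set_id}/examples"),  # Step 2
    "run_evaluation": ("POST", "/pipelines/{pipeline_id}/evaluate"),     # Step 3
    "get_evaluation": ("GET", "/evaluations/{evaluation_id}"),           # Step 3 (polling)
    "get_results": ("GET", "/evaluations/{evaluation_id}/results"),      # Step 4
}


def endpoint_url(name: str, **ids: str) -> tuple[str, str]:
    """Return (method, full URL) for a named endpoint, percent-encoding each ID."""
    method, template = ENDPOINTS[name]
    encoded = {key: quote(value, safe="") for key, value in ids.items()}
    # str.format raises KeyError if a required ID is missing
    return method, BASE_URL + template.format(**encoded)
```

For example, `endpoint_url("add_example", example_set_id="abc123")` returns `("POST", "https://api.catalyzed.ai/example-sets/abc123/examples")`.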

Prerequisites

- An active pipeline to evaluate

- Understanding of what correct outputs look like for your use case

Step 1: Create an Example Set

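First, create an example set that matches your pipeline’s input/output structure. The `inputsSchema` and `outputsSchema` declare the fields each example will contain. This one has a single required string input (`document`) and a single required string output (`summary`):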

Create example set

```bash
curl -X POST https://api.catalyzed.ai/example-sets \
  -H "Authorization: Bearer $API_TOKEN" \
  -H "Content-Type: application/json" \
  -d '{
    "teamId": "ZkoDMyjZZsXo4VAO_nJLk",
    "name": "Document Summarization Test Suite",
    "description": "Ground truth for testing document summarization quality",
    "inputsSchema": {
      "files": [],
      "datasets": [],
      "dataInputs": [
        { "id": "document", "name": "Document Text", "type": "string", "required": true }
      ]
    },
    "outputsSchema": {
      "files": [],
      "datasets": [],
      "dataInputs": [
        { "id": "summary", "name": "Summary", "type": "string", "required": true }
      ]
    }
  }'
```

```typescript
// Assumes the API token is available in the API_TOKEN environment variable
const apiToken = process.env.API_TOKEN;

const exampleSetResponse = await fetch("https://api.catalyzed.ai/example-sets", {
  method: "POST",
  headers: {
    Authorization: `Bearer ${apiToken}`,
    "Content-Type": "application/json",
  },
  body: JSON.stringify({
    teamId: "ZkoDMyjZZsXo4VAO_nJLk",
    name: "Document Summarization Test Suite",
    description: "Ground truth for testing document summarization quality",
    inputsSchema: {
      files: [],
      datasets: [],
      dataInputs: [
        { id: "document", name: "Document Text", type: "string", required: true },
      ],
    },
    outputsSchema: {
      files: [],
      datasets: [],
      dataInputs: [
        { id: "summary", name: "Summary", type: "string", required: true },
      ],
    },
  }),
});
const exampleSet = await exampleSetResponse.json();
const exampleSetId = exampleSet.exampleSetId;
```

```python
import os

import requests

# Assumes the API token is available in the API_TOKEN environment variable
api_token = os.environ["API_TOKEN"]

example_set_response = requests.post(
    "https://api.catalyzed.ai/example-sets",
    headers={"Authorization": f"Bearer {api_token}"},
    json={
        "teamId": "ZkoDMyjZZsXo4VAO_nJLk",
        "name": "Document Summarization Test Suite",
        "description": "Ground truth for testing document summarization quality",
        "inputsSchema": {
            "files": [],
            "datasets": [],
            "dataInputs": [
                {"id": "document", "name": "Document Text", "type": "string", "required": True}
            ],
        },
        "outputsSchema": {
            "files": [],
            "datasets": [],
            "dataInputs": [
                {"id": "summary", "name": "Summary", "type": "string", "required": True}
            ],
        },
    },
)
example_set_response.raise_for_status()
example_set = example_set_response.json()
example_set_id = example_set["exampleSetId"]
```

Step 2: Add Examples

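Add ground truth examples to your set. Each example needs an `input` and an `expectedOutput` whose keys match the slot IDs declared in the schemas (`document` and `summary`), plus a `rationale` that records why the expected output is correct: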

Add examples

```bash
# Example 1: Financial report
curl -X POST "https://api.catalyzed.ai/example-sets/$EXAMPLE_SET_ID/examples" \
  -H "Authorization: Bearer $API_TOKEN" \
  -H "Content-Type: application/json" \
  -d '{
    "name": "Q4 Financial Report",
    "input": {
      "document": "Q4 2024 Results: Revenue increased 15% year-over-year to $2.3M. Customer acquisition grew 22% with 145 new enterprise clients. Churn remained stable at 3.2%. Average contract value reached $180K, up $45K from Q3."
    },
    "expectedOutput": {
      "summary": "Q4 2024: Revenue up 15% YoY ($2.3M), 145 new enterprise clients (+22% acquisition), 3.2% churn, $180K ACV (+$45K from Q3)."
    },
    "rationale": "Summary should capture all key metrics in a concise format."
  }'

# Example 2: Product announcement
curl -X POST "https://api.catalyzed.ai/example-sets/$EXAMPLE_SET_ID/examples" \
  -H "Authorization: Bearer $API_TOKEN" \
  -H "Content-Type: application/json" \
  -d '{
    "name": "Product Launch Announcement",
    "input": {
      "document": "Today we announce the launch of DataSync Pro, our new enterprise data synchronization platform. DataSync Pro offers real-time bidirectional sync, supports 50+ connectors, and includes SOC 2 Type II compliance out of the box. Pricing starts at $500/month."
    },
    "expectedOutput": {
      "summary": "Launched DataSync Pro: enterprise data sync with real-time bidirectional sync, 50+ connectors, SOC 2 compliance. Starting at $500/month."
    },
    "rationale": "Summary should include product name, key features, and pricing."
  }'
```

```typescript
// Document and summary text abbreviated here; use the full text from the cURL example
const examples = [
  {
    name: "Q4 Financial Report",
    input: {
      document: "Q4 2024 Results: Revenue increased 15% year-over-year...",
    },
    expectedOutput: {
      summary: "Q4 2024: Revenue up 15% YoY ($2.3M), 145 new enterprise clients...",
    },
    rationale: "Summary should capture all key metrics in a concise format.",
  },
  {
    name: "Product Launch Announcement",
    input: {
      document: "Today we announce the launch of DataSync Pro...",
    },
    expectedOutput: {
      summary: "Launched DataSync Pro: enterprise data sync with real-time...",
    },
    rationale: "Summary should include product name, key features, and pricing.",
  },
];

for (const example of examples) {
  const response = await fetch(
    `https://api.catalyzed.ai/example-sets/${exampleSetId}/examples`,
    {
      method: "POST",
      headers: {
        Authorization: `Bearer ${apiToken}`,
        "Content-Type": "application/json",
      },
      body: JSON.stringify(example),
    }
  );
  // Stop on the first failed request instead of silently skipping the example
  if (!response.ok) {
    throw new Error(`Failed to add "${example.name}": HTTP ${response.status}`);
  }
}
```

```python
# Document and summary text abbreviated here; use the full text from the cURL example
examples = [
    {
        "name": "Q4 Financial Report",
        "input": {"document": "Q4 2024 Results: Revenue increased 15% year-over-year..."},
        "expectedOutput": {"summary": "Q4 2024: Revenue up 15% YoY ($2.3M)..."},
        "rationale": "Summary should capture all key metrics in a concise format.",
    },
    {
        "name": "Product Launch Announcement",
        "input": {"document": "Today we announce the launch of DataSync Pro..."},
        "expectedOutput": {"summary": "Launched DataSync Pro: enterprise data sync..."},
        "rationale": "Summary should include product name, key features, and pricing.",
    },
]

for example in examples:
    response = requests.post(
        f"https://api.catalyzed.ai/example-sets/{example_set_id}/examples",
        headers={"Authorization": f"Bearer {api_token}"},
        json=example,
    )
    # Stop on the first failed request instead of silently skipping the example
    response.raise_for_status()
```

Step 3: Run an Evaluation

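Run the evaluation against your pipeline. The example set and the pipeline name their fields independently, so `mappingConfig` maps each example slot (`exampleSlotId`) to a pipeline slot (`pipelineSlotId`). Here the example input `document` is sent to the pipeline input `input_text`, and the pipeline output `output_summary` is compared with the expected `summary`. The `llm_judge` evaluator grades each output against the `criteria` you supply: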

Run evaluation

```bash
curl -X POST "https://api.catalyzed.ai/pipelines/$PIPELINE_ID/evaluate" \
  -H "Authorization: Bearer $API_TOKEN" \
  -H "Content-Type: application/json" \
  -d '{
    "exampleSetId": "'"$EXAMPLE_SET_ID"'",
    "evaluatorType": "llm_judge",
    "evaluatorConfig": {
      "threshold": 0.7,
      "criteria": "Evaluate based on: 1) All key facts included, 2) Concise format, 3) No hallucinated information"
    },
    "mappingConfig": {
      "inputMappings": [
        { "exampleSlotId": "document", "pipelineSlotId": "input_text" }
      ],
      "outputMappings": [
        { "exampleSlotId": "summary", "pipelineSlotId": "output_summary" }
      ]
    }
  }'
```

```typescript
const evalResponse = await fetch(
  `https://api.catalyzed.ai/pipelines/${pipelineId}/evaluate`,
  {
    method: "POST",
    headers: {
      Authorization: `Bearer ${apiToken}`,
      "Content-Type": "application/json",
    },
    body: JSON.stringify({
      exampleSetId: exampleSetId,
      evaluatorType: "llm_judge",
      evaluatorConfig: {
        threshold: 0.7,
        criteria:
          "Evaluate based on: 1) All key facts included, 2) Concise format, 3) No hallucinated information",
      },
      mappingConfig: {
        inputMappings: [
          { exampleSlotId: "document", pipelineSlotId: "input_text" },
        ],
        outputMappings: [
          { exampleSlotId: "summary", pipelineSlotId: "output_summary" },
        ],
      },
    }),
  }
);
const evaluation = await evalResponse.json();
const evaluationId = evaluation.evaluationId;
```

```python
eval_response = requests.post(
    f"https://api.catalyzed.ai/pipelines/{pipeline_id}/evaluate",
    headers={"Authorization": f"Bearer {api_token}"},
    json={
        "exampleSetId": example_set_id,
        "evaluatorType": "llm_judge",
        "evaluatorConfig": {
            "threshold": 0.7,
            "criteria": "Evaluate based on: 1) All key facts included, 2) Concise format, 3) No hallucinated information",
        },
        "mappingConfig": {
            "inputMappings": [
                {"exampleSlotId": "document", "pipelineSlotId": "input_text"}
            ],
            "outputMappings": [
                {"exampleSlotId": "summary", "pipelineSlotId": "output_summary"}
            ],
        },
    },
)
evaluation = eval_response.json()
evaluation_id = evaluation["evaluationId"]
```

Wait for Completion

Poll the evaluation until its `status` is `succeeded` or `failed`:

```typescript
async function waitForEvaluation(evaluationId: string) {
  while (true) {
    const response = await fetch(
      `https://api.catalyzed.ai/evaluations/${evaluationId}`,
      { headers: { Authorization: `Bearer ${apiToken}` } }
    );
    const evaluation = await response.json();

    if (evaluation.status === "succeeded") {
      return evaluation;
    }
    if (evaluation.status === "failed") {
      throw new Error(evaluation.errorMessage);
    }

    console.log(`Status: ${evaluation.status}...`);
    await new Promise((r) => setTimeout(r, 3000));
  }
}

const completedEval = await waitForEvaluation(evaluationId);
console.log(`Score: ${completedEval.aggregateScore}`);
console.log(`Passed: ${completedEval.passedCount}/${completedEval.totalExamples}`);
```

Step 4: Analyze Results

View Aggregate Results

The completed evaluation includes summary statistics. In this sample, 21 of 25 examples passed, 3 failed, and 1 returned an error:

```json
{
  "evaluationId": "EvR8I6rHBms3W4Qfa2-FN",
  "status": "succeeded",
  "totalExamples": 25,
  "passedCount": 21,
  "failedCount": 3,
  "errorCount": 1,
  "aggregateScore": 0.84
}
```

Examine Individual Results

Fetch per-example results to understand specific failures. The `statuses` query parameter limits the response to results with the listed statuses, here `failed` and `error`:

Get evaluation results

```bash
curl "https://api.catalyzed.ai/evaluations/$EVALUATION_ID/results?statuses=failed,error" \
  -H "Authorization: Bearer $API_TOKEN"
```

```typescript
const resultsResponse = await fetch(
  `https://api.catalyzed.ai/evaluations/${evaluationId}/results?statuses=failed,error`,
  { headers: { Authorization: `Bearer ${apiToken}` } }
);
const { evaluationResults } = await resultsResponse.json();

for (const result of evaluationResults) {
  console.log(`Example: ${result.exampleId}`);
  console.log(`Score: ${result.score}`);
  console.log(`Feedback: ${result.feedback}`);
  console.log("---");
}
```

```python
results_response = requests.get(
    f"https://api.catalyzed.ai/evaluations/{evaluation_id}/results",
    params={"statuses": "failed,error"},
    headers={"Authorization": f"Bearer {api_token}"},
)
results = results_response.json()["evaluationResults"]

for result in results:
    print(f"Example: {result['exampleId']}")
    print(f"Score: {result['score']}")
    print(f"Feedback: {result['feedback']}")
    print("---")
```

Each result includes:

- `score` - Numerical score (0.0 to 1.0)

- `feedback` - Evaluator’s explanation

- `actualOutput` - What the pipeline produced

- `mappedInput` - Input that was sent to the pipeline
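With these fields, a short script can confirm the filtered list is complete and order it for review. The Python sketch below is one way to do that, not part of the API. `triage` is a hypothetical helper; it assumes `evaluation` is the completed evaluation object and `results` is the `evaluationResults` list fetched with `statuses=failed,error`. It also assumes errored results may have no `score`, so they are sorted ahead of scored failures.

```python
from typing import Any


def triage(
    evaluation: dict[str, Any], results: list[dict[str, Any]], limit: int = 5
) -> list[dict[str, Any]]:
    """Check the result count against the aggregate, then return the worst `limit` results."""
    expected = evaluation["failedCount"] + evaluation["errorCount"]
    if len(results) != expected:
        # A mismatch may mean the list is incomplete (for example, paginated)
        raise ValueError(f"expected {expected} failed/error results, got {len(results)}")

    def sort_key(result: dict[str, Any]) -> tuple[bool, float]:
        score = result.get("score")
        # Results without a score sort first, then scored results from lowest to highest
        return (score is not None, score if score is not None else 0.0)

    return sorted(results, key=sort_key)[:limit]
```

With the sample aggregate response, the check expects 3 + 1 = 4 results.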

Common Issues to Look For

| Pattern | Possible Cause | Action |
|---|---|---|
| Consistently low scores | Pipeline configuration issues | Review system prompt, parameters |
| Specific example types fail | Missing training data for edge cases | Add more examples of that type |
| High variance in scores | Inconsistent evaluation criteria | Refine evaluation criteria |
| Errors on certain inputs | Input format incompatibility | Check mapping configuration |
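For the "High variance in scores" row, one way to separate an inconsistent judge from genuine quality differences between examples is to run the same evaluation more than once and compare each example's scores across runs. The Python sketch below assumes you have collected each run's `evaluationResults` list and that those lists include every example (not only failures); `unstable_examples` and the 0.1 cutoff are hypothetical choices, not API features.

```python
import statistics
from collections import defaultdict
from typing import Any


def unstable_examples(
    runs: list[list[dict[str, Any]]], max_stdev: float = 0.1
) -> dict[str, float]:
    """Return {exampleId: population stdev of its scores} for examples above max_stdev."""
    scores: dict[str, list[float]] = defaultdict(list)
    for run in runs:
        for result in run:
            # Skip results without a score (for example, errored examples)
            if result.get("score") is not None:
                scores[result["exampleId"]].append(result["score"])

    unstable = {}
    for example_id, values in scores.items():
        if len(values) < 2:
            continue  # At least two scores are needed to measure spread
        spread = statistics.pstdev(values)
        if spread > max_stdev:
            unstable[example_id] = spread
    return unstable
```

Examples that score very differently across identical runs point at the evaluation criteria rather than the pipeline, which is where the table suggests refining.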

Next Steps

After analyzing results, you have several options:

Improve the Pipeline

- Adjust configuration - Update system prompts and model parameters

- Run synthesis - Use synthesis runs to get AI-suggested improvements

Improve the Test Suite

- Add more examples - Expand coverage of edge cases

- Refine expected outputs - Make examples more representative

- Update rationale - Document what makes outputs correct
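Before uploading new or revised examples, a local check can catch ones that would not line up with the example set's schemas. The Python sketch below is one possible approach: `lint_examples` is a hypothetical helper, and it only inspects `dataInputs` slots (the `files` and `datasets` lists are ignored). It reports missing required slots, undeclared keys, duplicate names, and missing rationales.

```python
from typing import Any


def lint_examples(
    examples: list[dict[str, Any]],
    inputs_schema: dict[str, Any],
    outputs_schema: dict[str, Any],
) -> list[str]:
    """Return human-readable problems; an empty list means no issues were found."""
    problems: list[str] = []
    seen_names: set[str] = set()
    for index, example in enumerate(examples):
        label = example.get("name") or f"example #{index}"
        if label in seen_names:
            problems.append(f"{label}: duplicate name")
        seen_names.add(label)

        # Compare input/expectedOutput keys with the dataInputs slots of each schema
        for section, schema in (("input", inputs_schema), ("expectedOutput", outputs_schema)):
            provided = set(example.get(section) or {})
            slots = schema.get("dataInputs", [])
            declared = {slot["id"] for slot in slots}
            required = {slot["id"] for slot in slots if slot.get("required")}
            for slot_id in sorted(required - provided):
                problems.append(f"{label}: {section} is missing required '{slot_id}'")
            for slot_id in sorted(provided - declared):
                problems.append(f"{label}: {section} has undeclared '{slot_id}'")

        if not example.get("rationale"):
            problems.append(f"{label}: no rationale")
    return problems
```

Running it on the `examples` list from Step 2, with the `inputsSchema` and `outputsSchema` from Step 1, should return an empty list.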

Continuous Evaluation

Set up regular evaluations to track quality over time:

```typescript
// Intended to run on a schedule (for example, weekly from a cron job or CI pipeline).
// startEvaluation is a helper that wraps the evaluate request from Step 3
// and returns the parsed response.
async function runWeeklyEvaluation(pipelineId: string, exampleSetId: string) {
  const evaluation = await startEvaluation(pipelineId, exampleSetId);
  const completed = await waitForEvaluation(evaluation.evaluationId);

  // Alert if quality drops below the chosen floor
  if (completed.aggregateScore < 0.8) {
    console.warn(`Quality alert: Score dropped to ${completed.aggregateScore}`);
    // Send notification, create ticket, etc.
  }

  return completed;
}
```

Related Guides

- Promoting Executions - Build example sets from production data

- Feedback Loops - Capture signals and run synthesis

- Example Sets - Detailed example set documentation

- Evaluations - Evaluation API reference